Introduction: The Real War Behind the AI Boom

Artificial Intelligence is no longer a futuristic concept; it is the driving force behind the most significant technological advancements of our time. From sophisticated large language models (LLMs) that power conversational AI and content generation to intelligent automation reshaping industries, AI’s pervasive influence is undeniable. While headlines often laud the latest AI applications and breakthroughs in model capabilities, the true foundation enabling this revolution often remains out of sight: the underlying AI infrastructure.

In 2025, the global AI market is not just expanding; it’s exploding. The overall artificial intelligence market, valued at approximately $233.46 billion in 2024, is projected to reach $294.16 billion in 2025 and a staggering $1,771.62 billion by 2032, a compound annual growth rate (CAGR) of 29.2% from 2025 to 2032. This growth is not confined to software and applications; crucially, it extends to the hardware and cloud services that underpin them. The real war, the one that will determine who truly dominates the AI era, is being fought on the battleground of infrastructure: who builds it, who controls access to it, and ultimately, who profits from its immense power.
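As a quick sanity check on those projections, the growth rate can be recomputed from the 2025 and 2032 market sizes. This is a minimal sketch: the function name `cagr` is an illustrative choice, and the inputs are simply the figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the article, in billions of USD.
market_2025 = 294.16
market_2032 = 1771.62

rate = cagr(market_2025, market_2032, years=2032 - 2025)
print(f"Implied CAGR 2025-2032: {rate:.1%}")
```

The result lands at roughly 29.2%, which matches the quoted rate, so the three figures are at least internally consistent.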

This article delves into the high-stakes struggle for AI infrastructure supremacy, exploring the formidable roles of cloud giants, the intense competition in the chip market, and the strategic maneuvers that will shape the future of AI.

Who’s Controlling the AI Infrastructure in 2025?

The sheer computational demands of modern AI, particularly large language models (LLMs), have fundamentally reshaped the technological landscape. In 2025, controlling AI infrastructure means dominating the foundational elements that enable AI development, training, and deployment. This isn’t just about raw compute power; it encompasses a sophisticated interplay of specialized hardware, high-performance storage, ultra-low-latency networking, and robust model-serving platforms.

The core components of AI infrastructure can be broken down as follows:

Compute Power (AI Accelerators): This is the most visible and often discussed component, dominated by Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), and more specialized AI accelerators (ASICs, NPUs). These units are designed for parallel processing, critical for the massive matrix multiplications involved in AI model training and inference.

High-Performance Storage: AI models require access to colossal datasets for training, often petabytes in size. This necessitates highly scalable, high-throughput storage solutions like object storage, data lakes, and increasingly, vector databases optimized for embedding search.

Ultra-Low-Latency Networking: To efficiently distribute workloads across thousands of accelerators and move data to and from storage, extremely fast and reliable networking (e.g., InfiniBand, high-speed Ethernet) is indispensable, minimizing bottlenecks and maximizing utilization.

Model-Serving Platforms (MLOps): Beyond raw hardware, sophisticated software platforms are crucial for managing the entire AI lifecycle – from data preparation and model training to deployment, monitoring, and versioning. These platforms simplify the complexities of putting AI into production at scale.

In 2025, the entities wielding the most control over these components are the hyperscale cloud providers. They have invested billions in building vast, global networks of data centers equipped with cutting-edge AI hardware. Their scale, combined with their integrated software stacks, positions them as the de facto “invisible backbone” of the AI revolution.

For instance:

- Microsoft Azure is the primary infrastructure provider for OpenAI, the creator of GPT models. This deep partnership gives Azure a significant edge, as OpenAI’s continuous innovation directly translates into demand for Azure’s AI compute resources and specialized services. Azure offers a comprehensive suite of AI services, including Azure OpenAI Service, which provides REST API access to OpenAI’s powerful language models (like GPT-4o, GPT-3.5-Turbo, and their reasoning-focused ‘o3’ models) with Azure’s enterprise-grade security and compliance.

- Google Cloud is the foundational platform for Google DeepMind (their own leading AI research arm) and has a strategic partnership with Anthropic, the developer of the Claude series of LLMs. Google Cloud’s Vertex AI platform provides access to Google’s powerful Gemini models and offers a unified environment for building, training, and deploying ML models, leveraging their proprietary TPUs for optimized performance. Anthropic specifically leverages Google Cloud’s cutting-edge GPU and TPU clusters for training, scaling, and deploying its AI systems, highlighting the critical role of Google’s specialized hardware.

- Amazon Web Services (AWS) supports a vast ecosystem of AI developers and its own initiatives, including Amazon Bedrock (a fully managed service offering access to various foundation models, including Anthropic’s Claude 4 and AWS’s new Amazon Nova models) and Amazon SageMaker for end-to-end machine learning. AWS has made significant investments in its own custom AI chips (Trainium2 and Inferentia2) to optimize performance and cost for its vast customer base. In 2025, AWS doubled down on its Nvidia partnership, launching P6e-GB200 UltraServers based on Nvidia Grace Blackwell Superchips, demonstrating their commitment to offering leading-edge compute.

These cloud giants are not merely reselling hardware; they are providing integrated, scalable ecosystems that abstract away much of the underlying complexity, allowing businesses and developers to focus on building AI applications rather than managing complex infrastructure.

The Invisible Backbone: LLM Infrastructure Providers

Every major large language model, whether it’s OpenAI’s GPT-4o, Anthropic’s Claude 4, or Google’s Gemini, relies on a colossal distributed computing infrastructure to function. These models, with billions to trillions of parameters, demand unprecedented amounts of compute power for both their initial training (which can take months and cost tens to hundreds of millions of dollars) and their ongoing inference (serving live requests).

The cloud giants serve as the “invisible backbone” because they provide the scalable, on-demand access to the specialized hardware and software environments necessary for these models. Without the massive data centers, custom silicon, and sophisticated MLOps tools provided by Microsoft Azure, Google Cloud, and AWS, the current AI revolution simply wouldn’t be possible at its current scale and pace. They are not just hosting these models; they are enabling their very existence and rapid evolution by continuously pushing the boundaries of what infrastructure can deliver. The ability to abstract away the complexity of managing thousands of GPUs, ensuring optimal data flow, and providing robust security is what allows LLM developers to innovate at breakneck speed.

Nvidia Dominance and the Rise of Custom Chips

In 2025, no discussion of AI infrastructure is complete without acknowledging Nvidia’s seemingly unshakeable dominance of the AI chip market. Its H100 Tensor Core GPU, based on the Hopper architecture, has cemented itself as the industry standard for high-performance AI training and inference. Priced at approximately $25,000 to $40,000 per unit (depending on the variant and vendor), the H100 remains in exceptionally high demand, with its entire 2025 production run reportedly pre-sold by late 2024. That demand shows in Nvidia’s financial results: data center sales account for the vast majority of its soaring revenue, marking its transformation into primarily an AI data center supplier.

The H100’s supremacy stems from its raw compute power, its large and fast memory (80 GB of HBM3 with roughly 3.35 TB/s of bandwidth on the SXM5 module), and, critically, its mature and comprehensive software ecosystem, CUDA. CUDA provides developers with a robust set of tools, libraries, and frameworks that have become the de facto standard for AI development, creating a significant “moat” that is difficult for competitors to overcome.

However, Nvidia’s near-monopoly, while highly profitable for the company, has created challenges for the broader AI industry. The high cost and limited availability of H100s have created a bottleneck in AI development, particularly for startups and smaller enterprises. This scarcity has fueled intense competition and driven up prices, even for cloud-based instances, where H100 rental rates can range from $1.65 to over $11 per hour.
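To put the rental and purchase figures side by side, here is a rough break-even sketch. It uses only the price ranges quoted above and treats $11 per hour as the upper rental bound for illustration. It deliberately ignores power, cooling, hosting, networking, and staffing, so it understates the true cost of ownership.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rental after which cumulative rent equals the purchase price."""
    return purchase_price / hourly_rate


# Ranges quoted in the article (USD). Hardware price only; no operating costs.
purchase_low, purchase_high = 25_000, 40_000
rent_low, rent_high = 1.65, 11.00

fastest = break_even_hours(purchase_low, rent_high)   # cheap card, expensive rental
slowest = break_even_hours(purchase_high, rent_low)   # expensive card, cheap rental

print(f"Break-even between {fastest:,.0f} and {slowest:,.0f} rental hours")
print(f"That is about {fastest / 24:.0f} to {slowest / 24:.0f} days of continuous use")
```

The wide spread between the two ends is the point: whether renting or buying makes sense depends heavily on which rental rate a team can actually secure and how continuously the hardware would be used.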

This environment has, in turn, spurred significant investment and innovation from competitors seeking to chip away at Nvidia’s dominance, in both alternative hardware and software ecosystems.

H100 vs MI300: The Battle Heats Up

The most prominent challenger in 2025 is AMD with its Instinct MI300X accelerator, built on the CDNA 3 architecture. The MI300X has been strategically positioned to directly compete with the H100, especially for large language model inference workloads where memory capacity is paramount.

| Feature | NVIDIA H100 (SXM5) | AMD MI300X |
|---|---|---|
| Architecture | Hopper | CDNA 3 |
| Memory Capacity | 80 GB HBM3 | 192 GB HBM3 |
| Memory Bandwidth | ~3.35 TB/s | ~5.3 TB/s |
| Peak FP16 Perf. (dense) | ~990 TFLOPS | ~1.3 PFLOPS |
| Peak FP16 Perf. (with sparsity) | ~1980 TFLOPS | ~2.6 PFLOPS |
| Key Advantage | Mature software ecosystem (CUDA), strong training perf | Superior memory & bandwidth, great for large-model inference |
| Software Stack | CUDA, cuDNN, TensorRT | ROCm (growing, open-source) |
| Availability | High demand, long lead times | Improving availability, gaining traction |

The MI300X boasts a significant advantage in memory capacity (192GB vs. 80GB) and memory bandwidth, which is crucial for handling massive LLMs that often exceed the memory capacity of a single H100. This translates to a notable latency advantage for the MI300X in certain LLM inference tasks, particularly with large models like LLaMA2-70B. AMD’s ROCm software platform, while still maturing compared to CUDA, is gaining traction, driven by its open-source nature and increasing support for popular AI frameworks.
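The memory argument is easy to make concrete. The sketch below estimates how much memory a model's weights alone occupy and how many accelerators are needed just to hold them. It is a lower bound under stated assumptions: it counts weights only and ignores the KV cache, activations, and framework overhead, and the helper names are illustrative.

```python
import math

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed for model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_accelerators(num_params: float, hbm_gb: float, dtype: str = "fp16") -> int:
    """Fewest devices whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / hbm_gb)


llama2_70b = 70e9  # parameter count of a 70B-class model

print(weight_memory_gb(llama2_70b))        # 140.0 GB of FP16 weights
print(min_accelerators(llama2_70b, 80))    # 2 devices with 80 GB each (H100)
print(min_accelerators(llama2_70b, 192))   # 1 device with 192 GB (MI300X)
```

A 70B-parameter model in FP16 therefore cannot fit on a single 80 GB card and must be split across at least two, which adds inter-device communication. On a 192 GB card it fits with room left for the KV cache.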

Intel is also a significant player, particularly with its Gaudi series of AI accelerators. The Intel Gaudi 3 (launched in late 2024 / early 2025) is Intel’s direct answer to the H100 and MI300X, showing competitive inference throughput for LLMs like LLaMA 3 8B, in some benchmarks even exceeding H100 performance. Intel is leveraging its extensive enterprise relationships and the growing demand for diverse AI hardware to position Gaudi as a viable alternative.

Google’s Tensor Processing Units (TPUs), while primarily used internally by Google Cloud and its partners (like Anthropic), represent another formidable custom chip architecture. The latest iterations, such as TPU v5e and TPU v5p, are designed for extreme energy efficiency and cost-effectiveness for training and inference, especially at scale. While a single H100 might offer higher raw throughput, Google claims its TPU v5e can achieve similar performance for GPT-3 benchmarks at a significantly lower cost per hour compared to H100 clusters, especially for training large models.

The AI Chip Market Is Heating Up

Nvidia’s virtual monopoly, particularly in high-end AI training chips, has created significant pricing issues. The price of an H100 far exceeds its manufacturing cost (estimated at around $3,320 per unit), which allows Nvidia to maintain massive profit margins. This has raised concerns about vendor lock-in and limited supply, pushing cloud providers and major AI labs to explore alternatives and even develop their own custom silicon.

The heating AI chip market is not just about the major players. A new wave of startups is attracting significant investor interest by focusing on novel architectures and specialized AI acceleration. Companies like Tenstorrent and Cerebras are pushing the boundaries with unique approaches.

- Cerebras Systems, known for its Wafer-Scale Engine (WSE) chips, which are the largest chips ever built, aims to tackle the memory and communication bottlenecks of distributed GPU clusters by putting an entire neural network onto a single, massive chip. As of July 2025, Cerebras continues to be a privately held company with a valuation around $4.7 billion and is exploring IPO opportunities. Their strategy targets extreme-scale AI problems where traditional GPU clusters might be inefficient.

- Tenstorrent, led by chip design veteran Jim Keller, is focusing on RISC-V based AI processors designed for scalability and efficiency across various workloads, from data centers to edge devices. Tenstorrent has continued to raise significant capital in 2025, attracting attention for its open-source approach and ambitious chip designs that challenge conventional architectures.

These startups are interesting to investors because they promise to disrupt the existing paradigm, offering potential breakthroughs in performance-per-watt, cost-efficiency, and flexibility, which could democratize access to advanced AI compute and reduce reliance on a single vendor. The shift towards custom chips is not merely a preference; it’s an economic imperative driven by the relentless pressure to improve size, weight, power, and cost (SWaP+C) for AI workloads, especially at hyperscale.

Cloud Computing and AI: The Strategic Nexus

The advent of large-scale AI, particularly generative AI, is not merely another workload for cloud providers; it has fundamentally reshaped their infrastructure planning and development strategies. In 2025, cloud computing isn’t just a platform for AI; it’s the very crucible where AI is forged and deployed at scale. The demand for AI-optimized infrastructure is stretching the capacity of even the largest cloud providers, forcing them to increase investments at an unprecedented rate. Global cloud infrastructure spending rose 21% year over year in Q1 2025, reaching $90.9 billion, largely driven by surging enterprise demand for embedding AI into everyday workflows.

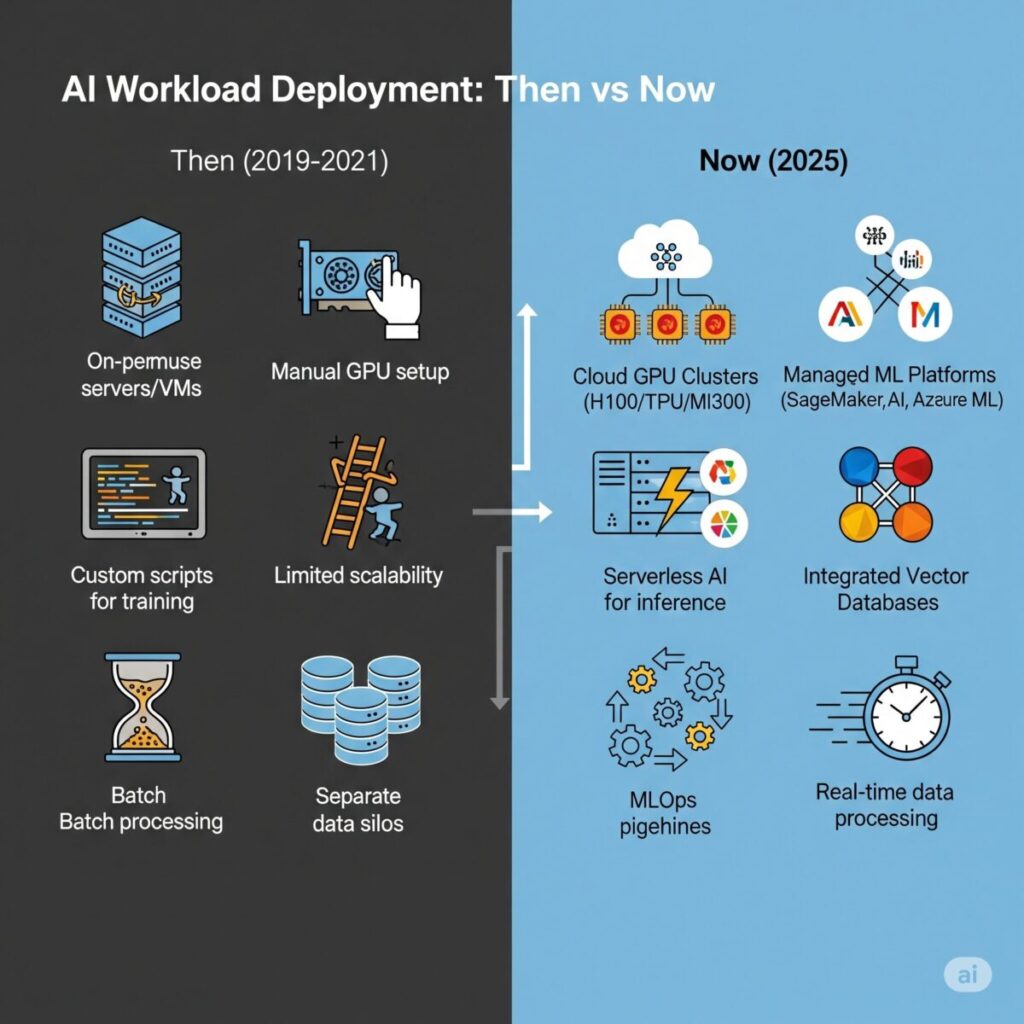

AI workloads are distinct from traditional computing tasks. They are characterized by:

- Massive data requirements: Training large models demands petabytes of data, requiring high-throughput storage solutions.

- Intense computational needs: Both training and inference are computationally intensive, requiring specialized accelerators like GPUs and TPUs.

- Burstiness and scalability: Training can involve peak, sustained demand, while inference requires rapid, elastic scaling to meet fluctuating user requests.

- Low-latency demands: Real-time AI applications, like conversational AI, necessitate ultra-low latency for an effective user experience.

These unique requirements have pushed cloud providers to innovate aggressively, moving beyond generic virtual machines to offer highly specialized services tailored for AI. This includes the proliferation of GPU clusters, the rise of serverless AI, and the critical emergence of vector databases.

Specialized Offerings by Cloud Giants:

- GPU Clusters: AWS, Google Cloud, and Azure have been in a race to deploy the latest and most powerful GPU clusters.

  - AWS offers a wide range of GPU-powered instances through its Elastic Compute Cloud (EC2), including the latest NVIDIA H100s (P5 instances), A100s (P4 instances), and their custom Trainium (for training) and Inferentia (for inference) chips, optimizing for both performance and cost. AWS has also partnered with Nvidia to offer Blackwell-based instances in 2025.

  - Google Cloud Platform (GCP) provides deep integration with its proprietary Tensor Processing Units (TPUs), optimized for TensorFlow and JAX workloads, alongside NVIDIA GPUs. Their highly flexible VM configurations allow users to attach various GPU types to custom CPU/memory setups, offering fine-grained control for AI workloads.

  - Microsoft Azure offers a robust selection of NVIDIA GPU VMs (including H100s and A100s), often integrating them seamlessly with their Azure Machine Learning platform. They also offer specialized services like Azure ML Compute for managed GPU clusters, ensuring enterprise-grade security and scalability. Oracle Cloud Infrastructure (OCI) has also become a major player in offering Nvidia GPU clusters, attracting large AI companies with competitive pricing and scale.

- Serverless AI: This paradigm allows developers to run AI inference or even micro-training tasks without managing underlying servers, automatically scaling resources up and down based on demand.

  - AWS Lambda can trigger AI model inference, integrating with services like Amazon SageMaker for model deployment. Services like Amazon Bedrock also offer a serverless experience for foundation models.

  - Azure Functions provide similar serverless capabilities, enabling event-driven AI applications that can call Azure Cognitive Services or custom models deployed on Azure ML.

  - Google Cloud Functions and Cloud Run allow for scalable, serverless deployment of AI models, often leveraging pre-built containers for common ML frameworks. Google Cloud’s AI Platform Prediction further simplifies serverless model serving.

- Vector Databases: The rise of Retrieval-Augmented Generation (RAG) and semantic search has made vector databases a crucial component of modern AI stacks. These databases store vector embeddings (numerical representations of data) and enable fast similarity searches, essential for providing context to LLMs and powering intelligent search experiences.

  - Cloud providers now offer or tightly integrate with leading vector database solutions. Pinecone, Weaviate, Qdrant, and Milvus are prominent players, with Pinecone offering a fully managed serverless solution that is widely adopted.

  - Google Cloud’s Vertex AI Vector Search (formerly Vertex AI Matching Engine) is a fully managed service for large-scale nearest neighbor search, essentially Google’s proprietary vector database offering.

  - While AWS and Azure don’t have their own first-party fully managed vector databases in the same vein as Pinecone or Google’s Vertex AI offering, they provide robust support and integrations for deploying popular open-source (e.g., Milvus, Weaviate) or commercial (e.g., Pinecone) vector databases on their infrastructure. They also offer services like Amazon OpenSearch Service and Azure Cosmos DB, which have added vector search capabilities.
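To make the vector database idea concrete, the following sketch shows the core operation such systems perform: ranking stored embeddings by similarity to a query embedding. It is a brute-force illustration in plain Python, not how any of the products named above are implemented; production systems typically rely on approximate nearest-neighbor indexes to stay fast at scale. The three-dimensional vectors and document IDs are made up for the example.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2):
    """Return the k stored items most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings"; real ones come from an embedding model and have
# hundreds or thousands of dimensions.
index = {
    "gpu-pricing": [0.9, 0.1, 0.0],
    "tpu-overview": [0.7, 0.3, 0.1],
    "cooking-tips": [0.0, 0.2, 0.9],
}

query = [1.0, 0.0, 0.0]
results = top_k(query, index, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

In a RAG pipeline, the top-ranked documents would then be added to the LLM prompt as context.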

The strategic imperative for cloud providers in 2025 is to offer a comprehensive, integrated suite of AI-optimized services that cover the entire AI lifecycle, from data ingestion and preparation to model training, deployment, and monitoring. This ensures that their customers, from startups to large enterprises, can build and scale AI applications efficiently without needing to manage the complex underlying hardware and software.

Microsoft vs Amazon AI: A Cloud Clash

The competition between Microsoft Azure and Amazon Web Services (AWS) for AI dominance is arguably the most significant cloud clash of 2025. Both hyperscalers are leveraging their massive scale, global reach, and deep pockets to attract and retain AI workloads, albeit with slightly different strategic advantages.

Microsoft Azure’s Edge with OpenAI: Azure’s crown jewel in the AI race is its exclusive and profound partnership with OpenAI. This collaboration gives Azure a unique and privileged position in the generative AI space. OpenAI’s models, including GPT-4o, DALL-E 3, and their cutting-edge reasoning-focused models, are developed and primarily hosted on Azure’s infrastructure. This translates into several key advantages for Azure:

- First-mover access to cutting-edge models: Azure customers get early and often preferential access to OpenAI’s latest models via the Azure OpenAI Service, which wraps these powerful APIs with Azure’s enterprise-grade security, compliance, and management features. This simplifies integration for businesses, allowing them to leverage state-of-the-art AI without managing the underlying complexities.

- Co-development and optimization: The partnership isn’t just about hosting; it involves deep technical collaboration, leading to highly optimized performance of OpenAI models on Azure’s specific hardware (including custom Microsoft AI chips like Maia, which are expected to roll out more broadly in 2025).

- Enterprise appeal: Microsoft’s long-standing relationships with enterprises, combined with the seamless integration of Azure AI services into existing Microsoft products (like Microsoft 365 Copilot, Dynamics 365, and Power Platform), make Azure a compelling choice for businesses looking to infuse AI into their established workflows. The Azure AI Foundry is a key offering, enabling large organizations to build, train, and deploy custom large-scale AI models.

Amazon Web Services (AWS) with Bedrock and Anthropic: AWS, while initially appearing to be catching up in the generative AI race, has rapidly accelerated its strategy, leveraging its vast existing customer base and extensive service catalog. AWS’s approach focuses on providing a broad choice of foundation models and robust MLOps capabilities, emphasizing flexibility and control for developers.

- Amazon Bedrock: This fully managed service is AWS’s direct answer to the need for accessible foundation models. Bedrock offers a “model as a service” approach, providing access to a range of leading FMs from Amazon (e.g., the new Amazon Nova models, Titan models), AI21 Labs, Cohere, Stability AI, and crucially, Anthropic’s Claude 4 (which has become a major competitor to OpenAI’s GPT series). Bedrock’s strength lies in allowing customers to easily experiment with different models, fine-tune them with their own data, and integrate them into applications using a consistent API.

- Amazon SageMaker: AWS continues to build upon SageMaker, its comprehensive machine learning platform, allowing developers to build, train, and deploy custom ML models at scale. SageMaker offers a vast array of tools, including managed Jupyter notebooks, data labeling, feature stores, and MLOps capabilities.

- Proprietary Chips (Trainium and Inferentia): AWS is heavily investing in its custom silicon, Trainium for training and Inferentia for inference. These chips are designed to offer superior price-performance for specific AI workloads running on AWS, reducing reliance on third-party silicon and providing cost efficiencies for customers. The latest Trainium2 chips are competitive with Nvidia’s offerings in certain large-scale training scenarios.

- Deep Customer Base and Ecosystem: AWS’s massive market share and deep penetration across industries give it a significant advantage. Its extensive network of partners, developer tools, and the sheer breadth of its services make it a sticky platform for many enterprises embarking on their AI journey.

Side-by-Side Comparison: NVIDIA H100 vs AMD MI300X: AI Chip Showdown

| Feature | NVIDIA H100 (SXM5) | AMD MI300X |
|---|---|---|
| Architecture | Hopper | CDNA 3 |
| Memory Capacity | 80 GB HBM3 | 192 GB HBM3 |
| Memory Bandwidth | ~3.35 TB/s | ~5.3 TB/s |
| Peak FP16 Performance (dense) | ~990 TFLOPS | ~1.3 PFLOPS |
| Peak FP16 Performance (with sparsity) | ~1980 TFLOPS | ~2.6 PFLOPS |
| Key Advantage | Mature CUDA ecosystem, strong for training, lower memory latency | Higher memory & bandwidth, efficient at inference, better at large batch sizes |
| Software Stack | CUDA, cuDNN, TensorRT | ROCm (open-source, gaining adoption) |
| Availability | High demand, limited supply, long lead times | Improving supply, increasingly adopted |

In 2025, the “cloud clash” isn’t about one clear winner across the board. Azure capitalizes on its OpenAI advantage and enterprise relationships, while AWS leverages its unparalleled scale, diverse model offerings, and cost-effective custom silicon. The competition is driving rapid innovation and ensuring that businesses have increasingly powerful and accessible AI infrastructure options.

The Startup Dilemma: AI Compute Wars & Cloud Lock-In

For burgeoning AI startups, the battle for AI infrastructure presents a significant dilemma. While the promise of AI innovation is boundless, the practical reality of acquiring and managing the necessary compute resources is a formidable hurdle. This environment has fostered what can be described as the “AI compute wars,” where access to powerful and affordable processing power is often the primary bottleneck, and the specter of “cloud lock-in” looms large.

New AI startups, particularly those focused on developing foundational models or compute-intensive AI applications, are almost invariably forced to pick sides amongst the major cloud providers (AWS, Azure, GCP). The upfront cost of building their own data centers with hundreds or thousands of GPUs is prohibitive, often running into hundreds of millions or even billions of dollars. This makes cloud infrastructure the only viable path to scale.

However, choosing a cloud partner is a high-stakes decision with long-term implications. Once a startup commits its significant AI workloads to a particular cloud provider, it becomes deeply embedded within that provider’s ecosystem. This integration extends beyond just compute; it includes their specific MLOps tools, storage solutions (like proprietary vector databases), networking configurations, and even their unique API structures for accessing foundation models. This creates a powerful cloud lock-in: the higher the investment in a specific cloud’s services, the more difficult and costly it becomes to migrate to another provider.

This lock-in manifests in several ways for AI startups:

- Financial Commitment: While cloud services offer flexibility, large-scale AI training can incur massive costs. Startups often sign long-term contracts or make significant reservations to secure discounted rates and guaranteed access to scarce GPU resources. Breaking these commitments can result in substantial penalties.

- Data Gravity: As AI models train on vast datasets, moving these petabytes or exabytes of data between cloud providers can be incredibly time-consuming, expensive (due to egress fees), and technically complex.

- Operational Integration: MLOps pipelines, data pipelines, and application logic become tightly coupled with cloud-specific services. Re-architecting these to fit a different cloud’s paradigm requires significant engineering effort and time, diverting resources from core AI development.

- Skill Set Specialization: Engineering teams develop expertise in a particular cloud provider’s tools and services. Shifting providers would necessitate retraining or hiring new talent, adding to operational overhead.
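The data gravity point above can be estimated with simple arithmetic. The sketch below computes an egress bill and a transfer time for a given dataset size. Both the per-GB rate and the link speed used in the example are hypothetical placeholders, not quotes from any provider; actual egress pricing varies by provider, region, and volume tier.

```python
def egress_cost_usd(dataset_tb: float, rate_per_gb: float) -> float:
    """Egress bill for moving a dataset out of a cloud (decimal TB to GB)."""
    return dataset_tb * 1000 * rate_per_gb


def transfer_days(dataset_tb: float, link_gbps: float) -> float:
    """Days needed to move the dataset over a fully utilized link."""
    bits = dataset_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9)
    return seconds / 86_400


# Hypothetical inputs for illustration only.
dataset_tb = 1000          # one petabyte of training data
assumed_rate = 0.05        # USD per GB, an assumed figure
assumed_link_gbps = 10     # sustained throughput, an assumed figure

print(f"Egress cost: ${egress_cost_usd(dataset_tb, assumed_rate):,.0f}")
print(f"Transfer time: {transfer_days(dataset_tb, assumed_link_gbps):.1f} days")
```

Even with these modest assumptions, moving a single petabyte costs tens of thousands of dollars and takes more than a week of saturated bandwidth, before any re-engineering work begins.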

Examples of Strategic Cloud Partnerships:

The strategic alliances between major AI labs and cloud giants underscore this dilemma:

- Anthropic on AWS: Anthropic, a leading developer of frontier AI models (Claude series), has solidified a major partnership with Amazon Web Services. This collaboration, which includes an expanded $4 billion investment from Amazon (bringing their total investment to $8 billion), establishes AWS as Anthropic’s primary cloud and training partner. Anthropic leverages AWS’s advanced GPU and custom-silicon (Trainium and Inferentia) clusters for training and deploying its Claude models. This deep integration means Claude models are now core infrastructure within Amazon Bedrock, simplifying deployment for tens of thousands of enterprises. This strategic choice allows Anthropic to scale its ambitious research without the burden of building and managing physical infrastructure, while providing AWS with a cutting-edge LLM to offer its vast customer base.

- OpenAI on Azure: Similarly, OpenAI, the creator of the GPT series, has a long-standing, multi-billion dollar partnership with Microsoft Azure. OpenAI has committed to using Azure as its primary cloud provider for all its research, development, and production workloads. This partnership gives Azure exclusive cloud distribution rights for OpenAI’s frontier models through the Azure OpenAI Service. For OpenAI, Azure provides the massive supercomputing capabilities required for training models like GPT-4o, while for Microsoft, it cements Azure’s position as a leading AI cloud, deeply integrating OpenAI’s capabilities into its enterprise offerings and boosting its competitive stance against AWS.

These partnerships, while providing necessary scale and resources for the AI innovators, also highlight the concentration of AI compute power within a few dominant cloud platforms. For smaller startups without the leverage of an OpenAI or Anthropic, securing sufficient, affordable compute remains a constant battle. They often face higher costs, limited access to the latest GPUs, and the pressure to quickly demonstrate value before their compute budget runs dry.

The AI compute wars thus extend beyond just the chipmakers; they encompass the fierce competition among cloud providers to attract and retain the most promising AI talent and startups, solidifying their long-term dominance over the foundational layer of the AI economy.

What This Means for Investors, Founders, and the Future

The escalating battle for AI infrastructure in 2025 carries profound strategic implications across the entire technology ecosystem, reshaping opportunities and challenges for investors, founders, and ultimately, the future of AI’s integration into society.

For Investors: Focus on Infra Startups or Chip Plays

Investors are recalibrating their portfolios to align with the foundational shifts in AI. While the allure of flashy AI applications remains, smart money is increasingly flowing into the underlying infrastructure.

- Infrastructure Startups: The “picks and shovels” analogy holds true. Companies developing innovative solutions for AI infrastructure – whether in specialized hardware, efficient data management (e.g., advanced vector databases, data orchestration), MLOps tools, or novel networking technologies – are becoming highly attractive. These companies provide essential building blocks that transcend specific AI models or applications, offering more diversified growth potential. Investors are looking for startups that can optimize for cost, efficiency, and scalability in AI compute.

- Chip Plays: The “chip wars” are far from over, and beyond Nvidia, there’s significant room for growth. Companies like AMD and Intel, which are actively challenging Nvidia’s dominance, present opportunities, especially as their competitive offerings mature and gain market share. Furthermore, investors are keenly eyeing the custom silicon initiatives by cloud giants (AWS Trainium, Google TPUs, Microsoft Maia) and specialized AI chip startups (e.g., Tenstorrent, Cerebras) that aim to provide niche performance advantages or disrupt the cost structure of AI compute. The sheer capital expenditure of hyperscalers on AI infrastructure – estimated by Bloomberg to reach around $200 billion collectively in 2025 for major tech companies, with over $90 billion incremental spending dedicated mostly to Gen AI – underscores the sustained demand for these foundational technologies.

For Founders: Think Twice Before Choosing Your Cloud Partner

For AI startup founders, the choice of cloud partner is no longer a trivial decision; it’s a strategic alliance that can define their trajectory. The risk of “cloud lock-in” is real, and moving between providers once deeply integrated is akin to changing an airplane’s engine mid-flight.

Founders must consider:

- Cost vs. Performance vs. Flexibility: Evaluate which cloud offers the best balance for their specific AI workloads. Is raw performance for training paramount, or is cost-efficient inference at scale the priority? How much flexibility do they need to switch models or integrate open-source solutions?

- Ecosystem and Tools: Assess the maturity of the cloud provider’s MLOps tools, data services, and developer ecosystem. Will the chosen cloud support their entire AI lifecycle, from data labeling to model deployment and monitoring?

- Strategic Partnerships: For frontier AI model developers, forming a deep partnership with a cloud giant (like OpenAI with Azure, or Anthropic with AWS) can provide the necessary capital, compute, and distribution. For application-layer startups, the decision might hinge on existing customer relationships, developer familiarity, or specific feature sets.

- Future-Proofing: While complete vendor agnosticism is challenging, founders should architect their solutions with some level of modularity and API abstraction to mitigate future lock-in risks, particularly as the AI landscape evolves rapidly.
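
To make the modularity and API abstraction point concrete, here is a minimal sketch in Python. It assumes a hypothetical `TextModel` interface and stand-in backends named `cloud_a` and `cloud_b`. None of these names come from a real vendor SDK; in practice each backend would wrap that provider's own client library.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface the application codes against."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoBackend:
    """Stand-in for a vendor wrapper; a real one would call that vendor's API."""

    name: str

    def generate(self, prompt: str) -> str:
        # Tag the output with the backend name so the active provider is visible.
        return f"[{self.name}] {prompt}"


def build_backend(provider: str) -> TextModel:
    """Select a backend from configuration; provider names are illustrative."""
    registry = {
        "cloud_a": EchoBackend("cloud_a"),
        "cloud_b": EchoBackend("cloud_b"),
    }
    try:
        return registry[provider]
    except KeyError:
        raise ValueError(f"unknown provider: {provider}") from None


def summarize(model: TextModel, text: str) -> str:
    """Application logic depends only on TextModel, not on any vendor."""
    return model.generate(f"Summarize: {text}")


if __name__ == "__main__":
    print(summarize(build_backend("cloud_a"), "quarterly report"))
```

Because `summarize` only sees the interface, switching providers becomes a configuration change in `build_backend` rather than a rewrite of application code. The abstraction does not remove lock-in from data gravity or proprietary features, but it limits how far vendor-specific calls spread through the codebase.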

For Consumers: Expect Delays or Cost Increases in AI-Based Products

While AI promises unprecedented innovation, the underlying infrastructure battle can have tangible impacts on end-consumers:

- Cost Increases: The high cost of specialized AI chips and the intense demand for cloud compute can translate into higher operational expenses for AI companies. These costs may, in turn, be passed on to consumers in the form of more expensive AI-powered products and services. For instance, training a frontier model can cost hundreds of millions of dollars, and while inference costs are falling for smaller models, the overall demand for high-end compute keeps prices elevated.

- Service Limitations or Delays: The scarcity of cutting-edge GPUs and the sheer demand for compute can lead to “AI compute rationing.” This might result in longer wait times for AI-powered features, slower performance during peak usage, or a limited rollout of advanced AI capabilities to a select few, impacting the democratization of AI. For example, a chatbot might be slower, or a generative AI image service might have higher pricing tiers for faster generation.

- Innovation Pace: While competition drives innovation, infrastructure bottlenecks can slow down the development and deployment of truly transformative AI applications. Companies might be limited not by their ideas, but by their ability to access the necessary compute power.

As Satya Nadella, CEO of Microsoft, reflected in early 2025, tempering some of the AI hype, the challenge for the industry is to ensure that massive AI investments translate into tangible economic value. In his view, AI is not yet creating real value, and he urged a focus on measurable productivity and economic growth rather than on chasing abstract concepts like Artificial General Intelligence. This underscores the need for the infrastructure to deliver on its promise of enabling widespread, impactful AI.

Conversely, Jensen Huang, CEO of Nvidia, continues to emphasize AI’s transformative potential as a “great equalizer.” He posits that AI makes programming more accessible by allowing people to use natural language, effectively democratizing technological creation. However, this democratized access still hinges on the underlying, highly centralized, and capital-intensive infrastructure.

Conclusion: This Isn’t Just About AI — It’s About Control

The year 2025 undeniably marks a pivotal moment in the evolution of Artificial Intelligence. Beyond the dazzling applications and the rapid advancements in model capabilities, the true frontier of competition is the AI infrastructure that underpins it all. This isn’t just a technical race; it’s a strategic battle for control over the very foundation of the digital economy.

The cloud giants – Amazon, Microsoft, Google – are pouring unprecedented billions into building and maintaining their vast AI supercomputing facilities, recognizing that whoever controls the compute power, the data storage, and the optimized software stacks will dictate the pace and direction of AI innovation. Simultaneously, the “chip wars” are intensifying, with AMD and Intel challenging Nvidia’s formidable lead, and custom silicon becoming a crucial differentiator for cloud providers.

The real winners of 2025 will not solely be the companies developing the most intelligent algorithms or the most popular AI applications. Instead, long-term dominance will accrue to those who own, control, and efficiently scale the foundational infrastructure. This includes the providers of the specialized chips, the architects of the massive data centers, and the developers of the integrated cloud platforms that serve as the indispensable backbone for every AI endeavor. Their strategic decisions today will determine the accessibility, cost, and ultimate trajectory of AI for decades to come. The hidden battlefield of AI infrastructure is where the future is truly being decided.
