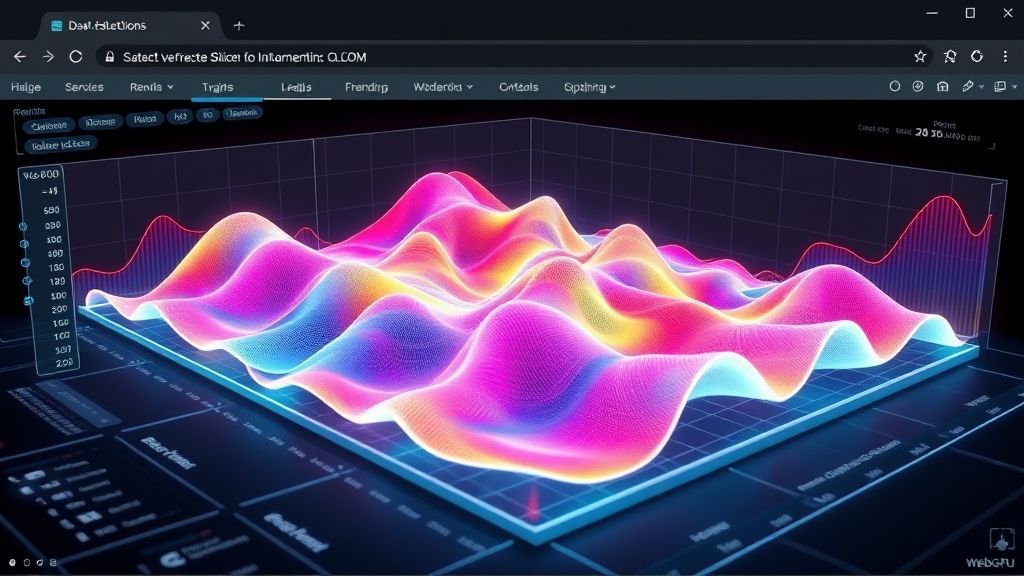

“Forget static charts — render full 3D volumes right inside your browser.”

Want to slice through 3D datasets in real time — right in your browser?

Thanks to WebGPU, what once needed powerful desktop software can now run directly in the browser, starting with Chrome and other Chromium-based browsers.

TL;DR

- WebGPU is transforming browser-based 3D rendering

- No more server-side crunching — render 3D volumes fully client-side

- Lower CPU overhead than WebGL, plus built-in compute shaders, for near-native smoothness

- Try it yourself: interactive demos prove the speed

“Imagine exploring a 3D medical scan right inside your browser — slicing, rotating, zooming — all in real-time, with zero lag. Until recently, that dream was held back by WebGL’s limitations. But WebGPU is here to change everything. Welcome to the next generation of browser-native 3D data visualization.”


Why Web-Based 3D Data Visualization Still Sucks (And How WebGPU Fixes It)

Visualizing complex 3D datasets — like medical imaging (MRI, CT scans), seismic models, or large-scale simulations — is crucial for research, engineering, and tech. But web-based tools have always struggled here.

Most of today’s interactive visualizations rely on WebGL, a capable but aging technology. WebGL 1.0 is based on OpenGL ES 2.0, which dates back to 2007, and WebGL 2.0 is based on OpenGL ES 3.0 from 2012. That’s a lifetime ago in GPU terms.

The limitations of WebGL quickly surface when rendering:

- 🔄 Huge datasets (>10 million points or large 3D volumes)

- ⚡ Real-time interactivity

- 🧠 Client-side computation (like filtering, raycasting, etc.)

- 🎛️ Dynamic controls for slicing, thresholding, or coloring

Result? Sluggish performance. Laggy controls. Limited interactivity. And sometimes — total crashes.

Imagine a doctor exploring a volumetric 3D brain scan on a browser-based tool. Every time they rotate the model or adjust a threshold slider, it lags for a second or more. This delay breaks the workflow and reduces trust in the tool.

Demo:

🔗 Try this WebGL-based volume renderer — and notice the lag. Great for comparing with WebGPU’s smoothness: https://kitware.github.io/vtk-js/examples/VolumeRendering.html

📽️ Alternatively, check out this video demo comparing WebGL vs WebGPU rendering in real time. Credit: “WebGPU vs WebGL: What is the Future of Web Rendering?” by JavaScript Mastery

WebGPU: The Next-Gen Graphics API Built for the Modern Web

WebGPU is a modern graphics and computation API for the web, designed from the ground up to unlock GPU power directly in the browser.

Unlike WebGL, which is based on OpenGL ES (programmable vertex and fragment shaders driven through a global state machine, with no compute stage), WebGPU is modeled after Vulkan, Metal, and Direct3D 12: explicit, low-overhead APIs used in modern games and pro apps.

🧠 Key WebGPU Advantages:

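| Feature | WebGL | WebGPU |
|---|---|---|
| Based on | OpenGL ES | Vulkan / Metal / Direct3D 12 |
| Pipeline | Programmable vertex and fragment shaders, global state machine | Programmable render and compute pipelines, explicit pipeline objects |
| Compute shaders | ❌ Not natively supported | ✅ Built-in |
| Performance | Moderate (more CPU work per draw call) | ⚡ High-efficiency (less CPU work per draw call) |
| Memory management | Limited | Advanced (more control) |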

🚀 Performance Gains:

WebGPU reduces CPU-GPU bottlenecks and gives developers lower-level control for:

- More efficient draw calls

- Parallel computations on the GPU

- Complex, real-time effects without needing the server

🔥 Compute Shaders: WebGPU’s Secret Weapon

One of the most exciting parts? Compute shaders — programs that run directly on the GPU for tasks like:

- Voxel classification

- Real-time filtering

- Dynamic slicing

- Physics simulations

This means your app can manipulate 3D data on the client side, with zero server delay.

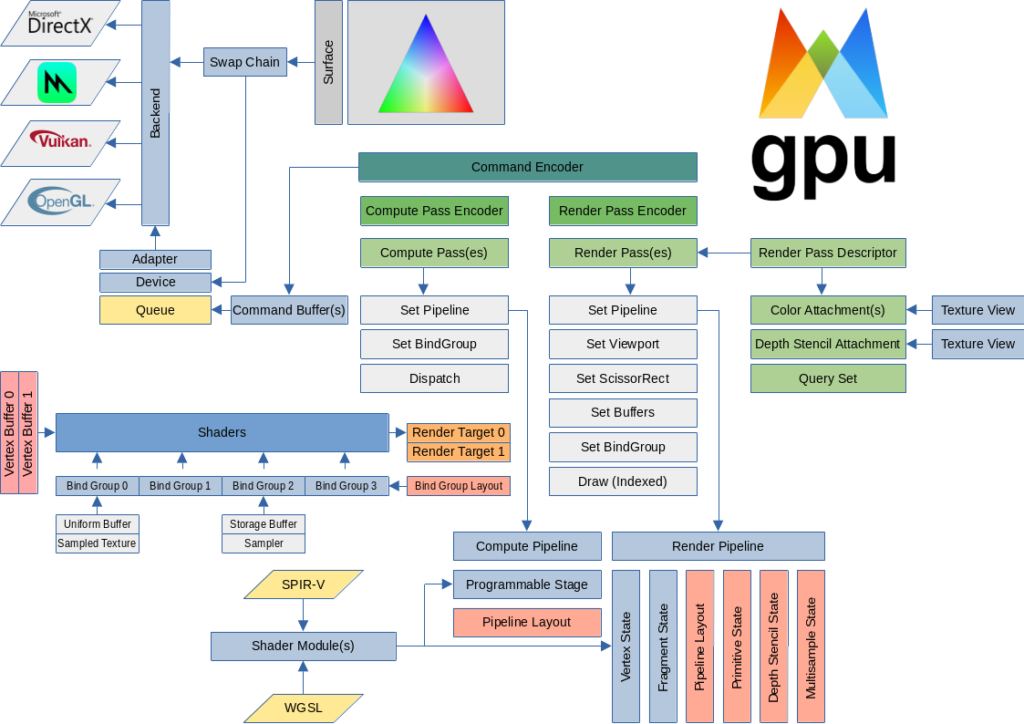

📊 Architecture Diagram:

```text
+---------------------------+     +---------------------------+
|           WebGL           |     |          WebGPU           |
+---------------------------+     +---------------------------+
| JavaScript API (OpenGL ES)|     | JavaScript API (Modern)   |
| -> Limited access to GPU  |     | -> Low-level GPU Access   |
| -> No compute shaders     |     | -> Compute + Graphics     |
+---------------------------+     +---------------------------+
              ↓                                 ↓
            Driver                      Native GPU Backend
                                      (Vulkan, Metal, DX12)
```

🧪 Bonus Snippet: Minimal WebGPU Init

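```javascript
// Assumes a <canvas> element exists on the page and this runs in a module script.
const canvas = document.querySelector("canvas");
// requestAdapter() resolves to null when no suitable GPU is available.
const adapter = await navigator.gpu?.requestAdapter();
if (!adapter) throw new Error("WebGPU is not available in this browser");
const device = await adapter.requestDevice();
const canvasContext = canvas.getContext("webgpu");
canvasContext.configure({
  device,
  format: navigator.gpu.getPreferredCanvasFormat(),
});
```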

That’s how little code it takes to initialize a WebGPU device. From here, you write shaders in WGSL and manage buffers, bind groups, and pipelines explicitly, in a style that resembles Vulkan but with far less boilerplate.
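Not every browser exposes `navigator.gpu`, and `requestAdapter()` can resolve to `null`, so a real app needs a fallback path. Here is a minimal sketch of that check. The function name `initWebGPU`, its return shape, and the idea of passing in a navigator-like object (so it can be exercised outside a browser) are assumptions made for illustration, not part of the WebGPU API.

```javascript
// Sketch: `nav` is any navigator-like object (in a page, pass `navigator`).
// Returns { ok: false, reason } instead of throwing, so callers can fall back.
async function initWebGPU(nav) {
  if (!nav || !nav.gpu) {
    return { ok: false, reason: "navigator.gpu is missing" };
  }
  // requestAdapter() resolves to null when no suitable GPU is found.
  const adapter = await nav.gpu.requestAdapter();
  if (!adapter) {
    return { ok: false, reason: "no suitable GPU adapter" };
  }
  const device = await adapter.requestDevice();
  return { ok: true, adapter, device };
}
```

In a page you might call `const gpu = await initWebGPU(navigator)` and switch to a WebGL renderer or a static image when `gpu.ok` is false.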

WebGPU brings the full power of modern GPUs to your browser, opening the door to real-time 3D visualization that was previously impossible with WebGL. It’s not just an upgrade — it’s a new era.

Why WebGPU Is a Game-Changer for Complex 3D Visualization

Real-time 3D visualization of complex data — like medical CT scans, seismic data, or fluid simulations — has always been painfully slow in the browser.

WebGL simply wasn’t built for massive data processing. In practice, that often means:

- Preprocessed data from a server

- Laggy interactions and delayed updates

- Limited control over raw GPU memory and compute

🧠 Why WebGPU Matters:

With WebGPU, all of that changes.

Instead of waiting for the server to slice your dataset or render images remotely, you can now do it live in the browser, with smooth interaction and GPU-accelerated rendering.

Imagine this:

- 💡 Scroll to slice through a volumetric MRI scan

- 🎨 Interactively change color maps based on thresholds

- 🔍 Zoom and rotate through millions of data points

- 🌀 Raymarch through volume datasets with real-time updates

All happening locally, in real-time, with no server round-trips.
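One common way to make threshold-driven color maps interactive is a small lookup table (often called a transfer function) that the shader indexes by voxel density. The sketch below builds one on the CPU. The blue-to-red ramp, the function name `buildTransferFunction`, and the 0 to 255 density range are all illustrative assumptions.

```javascript
// Builds a 256-entry RGBA lookup table (a "transfer function").
// Densities below `threshold` (0..255) stay fully transparent;
// the rest ramp from blue (low) to red (high) with rising opacity.
function buildTransferFunction(threshold) {
  const lut = new Uint8Array(256 * 4); // initialized to zeros
  for (let d = 0; d < 256; d++) {
    if (d < threshold) continue; // leave [0, 0, 0, 0]
    const t = threshold >= 255 ? 1 : (d - threshold) / (255 - threshold);
    lut[d * 4 + 0] = Math.round(255 * t);       // red
    lut[d * 4 + 1] = 0;                         // green
    lut[d * 4 + 2] = Math.round(255 * (1 - t)); // blue
    lut[d * 4 + 3] = Math.round(255 * t);       // alpha
  }
  return lut;
}
```

Because the table is only 1 KB, it can be rebuilt and re-uploaded (for example as a 256×1 `rgba8unorm` texture) on every slider event without touching the volume data itself.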

🔧 Behind the Magic – Compute Shaders at Work

WebGPU’s compute shaders let you:

- Process large 3D textures (voxels, point clouds, volume datasets)

- Implement dynamic raymarching/raycasting algorithms

- Perform client-side filtering, smoothing, or slicing

- Animate and transform data in real-time
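Raymarching and raycasting both start by finding where a ray enters and leaves the volume's bounding box. Here is a CPU sketch of the standard slab-method test against the unit cube, written in JavaScript so it is easy to check before porting to WGSL. The function name and the choice of a [0, 1]³ volume are assumptions.

```javascript
// Slab-method intersection of a ray with the unit cube [0,1]^3.
// Returns [tNear, tFar] along the ray, or null when the ray misses.
function intersectUnitCube(origin, dir) {
  let tNear = -Infinity;
  let tFar = Infinity;
  for (let axis = 0; axis < 3; axis++) {
    if (dir[axis] === 0) {
      // Parallel to this pair of planes: the origin must lie between them.
      if (origin[axis] < 0 || origin[axis] > 1) return null;
      continue;
    }
    const t0 = (0 - origin[axis]) / dir[axis];
    const t1 = (1 - origin[axis]) / dir[axis];
    tNear = Math.max(tNear, Math.min(t0, t1));
    tFar = Math.min(tFar, Math.max(t0, t1));
  }
  if (tNear > tFar || tFar < 0) return null;
  // Clamp to 0 so rays that start inside the cube begin at the origin.
  return [Math.max(tNear, 0), tFar];
}
```

For example, a camera at `[0.5, 0.5, 2]` looking down `[0, 0, -1]` enters the cube at `t = 1` and exits at `t = 2`, so the march only needs to cover that interval.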

✨ Sample Use Case: Dynamic Volume Rendering

Let’s say we want to visualize a 3D CT scan stored as a 256×256×256 voxel dataset.

With WebGPU, we can:

- Load the voxel data into a GPU buffer or 3D texture.

- Use a compute shader to perform thresholding and isosurface extraction.

- Use raymarching in a fragment shader to render it in real time.

- Let users adjust the threshold and color maps interactively.
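Before moving the thresholding step to the GPU, it can help to have a CPU reference to compare against on a small volume. The following is a rough sketch under assumed conventions: 8-bit voxels, x varying fastest in memory, and hypothetical helper names `thresholdVoxels` and `voxelIndex`.

```javascript
// CPU reference for the thresholding step: voxels below `threshold`
// are zeroed, the rest are kept unchanged.
function thresholdVoxels(voxels, threshold) {
  const out = new Uint8Array(voxels.length);
  for (let i = 0; i < voxels.length; i++) {
    out[i] = voxels[i] >= threshold ? voxels[i] : 0;
  }
  return out;
}

// Flattens (x, y, z) into the index of a tightly packed volume,
// with x varying fastest, then y, then z.
function voxelIndex(x, y, z, dims) {
  return x + dims[0] * (y + dims[1] * z);
}
```

Running both the CPU and GPU versions on something like a 16×16×16 test volume and diffing the results is a cheap way to catch indexing mistakes early.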

🔍 Sample Shader Snippet: Basic Compute Shader Setup

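```wgsl
// WebGPU Shader (WGSL)
@group(0) @binding(0) var<storage, read> inputData : array<f32>;
// Storage buffers are either read or read_write; there is no write-only mode.
@group(0) @binding(1) var<storage, read_write> outputData : array<f32>;

// Placeholder per-voxel operation: keep values above a fixed threshold.
fn process(value : f32) -> f32 {
  return select(0.0, value, value > 0.5);
}

@compute @workgroup_size(64)
fn main(@builtin(global_invocation_id) global_id : vec3<u32>) {
  let index = global_id.x;
  // Skip invocations past the end of the data.
  if (index >= arrayLength(&inputData)) { return; }
  outputData[index] = process(inputData[index]);
}
```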

You can now do parallel processing across millions of data points in the browser itself!
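One practical detail when dispatching over a full 256³ volume: with a workgroup size of 64 you need 262,144 workgroups, which exceeds WebGPU's default limit of 65,535 workgroups per dimension. A common workaround is to spread the workgroups over two dimensions. The helper below is a sketch of that arithmetic, and the name `dispatchSize` is an assumption.

```javascript
// Computes workgroup counts for dispatchWorkgroups(x, y, z).
// Falls back to a 2D grid when a 1D dispatch would exceed the
// per-dimension limit (65535 is the WebGPU default).
function dispatchSize(itemCount, workgroupSize = 64, maxPerDimension = 65535) {
  const groups = Math.ceil(itemCount / workgroupSize);
  if (groups <= maxPerDimension) return [groups, 1, 1];
  // Roughly square grid: x * y >= groups with both sides small.
  const x = Math.ceil(Math.sqrt(groups));
  const y = Math.ceil(groups / x);
  if (x > maxPerDimension || y > maxPerDimension) {
    throw new RangeError("too many workgroups for a 2D dispatch");
  }
  return [x, y, 1];
}
```

For 256³ items this yields `[512, 512, 1]`. With a 2D grid, the shader can no longer use `global_id.x` alone as the index; it would need something like `global_id.y * (x * 64u) + global_id.x`, plus a bounds check because the grid may overshoot the data.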

🎯 Real-World Use Cases:

- Medical Imaging: Real-time MRI/CT slicing with isosurface detection

- Geospatial Analysis: Dynamic exploration of terrain/elevation data

- Scientific Simulations: Volumetric fluid dynamics or particle fields

- Engineering: Real-time CAD inspection, cross-sectioning, stress visualization

🧠 Key Takeaway:

WebGPU lets your browser behave much like a GPU-native desktop app, handling complex, high-performance 3D data visualization without server-side rendering or preprocessing.

It’s not just hype — this is the real potential WebGPU unlocks.

From Theory to Code: Rendering 3D Volume Data with WebGPU

🎯 Goal:

Render a 256×256×256 volume dataset (like a CT scan) using WebGPU, complete with real-time interactivity — zoom, rotate, slice, and adjust threshold.

🚀 What We’ll Cover:

- Setup WebGPU in the browser

- Load 3D volume data (e.g., raw binary)

- Create WebGPU buffers & textures

- Write compute + fragment shaders for raymarching

- Add interactive controls (threshold, slicing, zoom)

🧱 Step 1: WebGPU Setup

```html
<!-- index.html -->
<canvas id="gpu-canvas" width="800" height="600"></canvas>
<script type="module" src="main.js"></script>
```

```javascript
// main.js
const canvas = document.getElementById("gpu-canvas");
// requestAdapter() resolves to null when no suitable GPU is available.
const adapter = await navigator.gpu?.requestAdapter();
if (!adapter) throw new Error("WebGPU is not available in this browser");
const device = await adapter.requestDevice();
const context = canvas.getContext("webgpu");
const format = navigator.gpu.getPreferredCanvasFormat();
context.configure({
  device,
  format,
  alphaMode: "opaque",
});
```
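The canvas above uses a fixed 800×600 drawing buffer. On high-DPI screens you may want the buffer to match physical pixels instead. Here is a small sketch of that calculation. The helper name `canvasPixelSize` is an assumption, and 8192 is WebGPU's default `maxTextureDimension2D` limit.

```javascript
// Converts a CSS size to a drawing-buffer size in physical pixels,
// clamped to [1, maxDimension] on each side.
function canvasPixelSize(cssWidth, cssHeight, devicePixelRatio, maxDimension = 8192) {
  const toPixels = (cssLength) =>
    Math.max(1, Math.min(maxDimension, Math.floor(cssLength * devicePixelRatio)));
  return { width: toPixels(cssWidth), height: toPixels(cssHeight) };
}
```

In a page this might be applied with `Object.assign(canvas, canvasPixelSize(canvas.clientWidth, canvas.clientHeight, window.devicePixelRatio))`, typically from a resize handler.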

📦 Step 2: Load Volume Data

```javascript
// Assume a Uint8Array representing 256³ voxels
const volumeSize = [256, 256, 256];
const response = await fetch('volume-data.raw');
if (!response.ok) throw new Error(`Failed to load volume: ${response.status}`);
const voxelData = new Uint8Array(await response.arrayBuffer());
```
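Raw volume files carry no header, so a truncated download or a wrong dimension guess only shows up later as a garbled render or a failed upload. A quick size check right after loading can catch that. This is a sketch, and the helper name `checkVolumeSize` is an assumption.

```javascript
// Compares the loaded byte count with what the dimensions imply.
// `bytesPerVoxel` is 1 for 8-bit data, 2 for 16-bit, and so on.
function checkVolumeSize(byteLength, dims, bytesPerVoxel = 1) {
  const expected = dims[0] * dims[1] * dims[2] * bytesPerVoxel;
  return { expected, ok: byteLength === expected };
}
```

For the 256³ 8-bit volume used here, `checkVolumeSize(voxelData.byteLength, volumeSize)` should report an expected size of 16,777,216 bytes (16 MiB).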

🧠 Step 3: Upload Volume to GPU as 3D Texture

```javascript
// Note: buffer-to-texture copies (copyBufferToTexture) need bytesPerRow
// aligned to 256 bytes; writeTexture does not. Here a row is exactly 256 bytes.
const volumeTexture = device.createTexture({
  size: volumeSize,
  dimension: '3d', // Without this, the size is read as a 2D texture array
  format: 'r8unorm',
  usage: GPUTextureUsage.TEXTURE_BINDING | GPUTextureUsage.COPY_DST,
});
device.queue.writeTexture(
  { texture: volumeTexture },
  voxelData,
  { bytesPerRow: 256, rowsPerImage: 256 },
  volumeSize
);
```
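The 256-byte row alignment matters when a volume is uploaded through a staging buffer with `copyBufferToTexture` and its rows are not already a multiple of 256 bytes (for example, a 100-voxel-wide 8-bit volume). A padding helper for that case could look roughly like this. The names `alignedBytesPerRow` and `padRows` are assumptions for illustration.

```javascript
const ROW_ALIGNMENT = 256; // bytesPerRow alignment for buffer-to-texture copies

function alignedBytesPerRow(width, bytesPerTexel = 1) {
  return Math.ceil((width * bytesPerTexel) / ROW_ALIGNMENT) * ROW_ALIGNMENT;
}

// Copies tightly packed rows into a buffer whose rows start on
// 256-byte boundaries. `rowCount` is height * depth for a 3D volume.
function padRows(data, width, rowCount, bytesPerTexel = 1) {
  const tight = width * bytesPerTexel;
  const padded = alignedBytesPerRow(width, bytesPerTexel);
  if (padded === tight) return { bytesPerRow: tight, data }; // already aligned
  const out = new Uint8Array(padded * rowCount);
  for (let row = 0; row < rowCount; row++) {
    out.set(data.subarray(row * tight, (row + 1) * tight), row * padded);
  }
  return { bytesPerRow: padded, data: out };
}
```

The returned `bytesPerRow` is what you would pass in the copy's data layout. A 256-wide 8-bit volume passes through unchanged.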

🔮 Step 4: Raymarching Shader (WGSL)

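```wgsl
@group(0) @binding(0) var volumeTex: texture_3d<f32>;

// Canvas size from index.html; pass this as a uniform in a real app.
const RESOLUTION = vec2<f32>(800.0, 600.0);

@fragment
fn main(@builtin(position) fragCoord: vec4<f32>) -> @location(0) vec4<f32> {
  // Raymarch loop: sample the volume and accumulate brightness.
  // Map pixel coordinates to [-1, 1] so each pixel gets its own ray direction.
  let uv = (fragCoord.xy / RESOLUTION) * 2.0 - 1.0;
  let rayDir = normalize(vec3<f32>(uv, -1.0));
  var pos = vec3<f32>(0.5); // Start in center
  var color = vec3<f32>(0.0);
  for (var i = 0u; i < 256u; i++) {
    // Clamp so the lookup stays inside the 256³ volume.
    let voxel = vec3<u32>(clamp(pos, vec3<f32>(0.0), vec3<f32>(1.0)) * 255.0);
    let density = textureLoad(volumeTex, voxel, 0).r;
    color += vec3<f32>(density) * 0.01;
    pos += rayDir * 0.005;
  }
  return vec4<f32>(color, 1.0);
}
```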
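The shader above simply adds up brightness along the ray. A fuller volume renderer usually composites samples front to back and stops once the ray is nearly opaque. The JavaScript sketch below shows that accumulation for a single ray so the math is easy to inspect. The grayscale output, the `opacityScale` parameter, and the 0.99 cutoff are assumptions for illustration.

```javascript
// Front-to-back compositing of scalar samples along one ray.
// `samples` are densities in [0, 1], ordered nearest first.
// `opacityScale` maps density to per-step opacity.
function compositeRay(samples, opacityScale = 0.1, cutoff = 0.99) {
  let color = 0; // accumulated grayscale intensity
  let alpha = 0; // accumulated opacity
  let steps = 0;
  for (const density of samples) {
    const a = Math.min(1, density * opacityScale);
    // Each sample is attenuated by what is already in front of it.
    color += (1 - alpha) * a * density;
    alpha += (1 - alpha) * a;
    steps++;
    if (alpha >= cutoff) break; // early ray termination
  }
  return { color, alpha, steps };
}
```

Early termination is where much of the speedup comes from on dense data: once `alpha` is close to 1, samples further along the ray cannot change the pixel noticeably.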

🕹️ Step 5: Add Interactive Controls

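```javascript
// Control threshold, view rotation, slicing, etc.
const shaderUniforms = { threshold: 0.5 };
const uniformBuffer = device.createBuffer({ size: 16, usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST });
const updateUniformBuffer = () =>
  device.queue.writeBuffer(uniformBuffer, 0, new Float32Array([shaderUniforms.threshold]));
document.getElementById("threshold-slider").addEventListener("input", (e) => {
  shaderUniforms.threshold = parseFloat(e.target.value);
  updateUniformBuffer();
});
```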

You can also use dat.GUI or Leva (which targets React apps) for a more polished control panel.
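As more controls are added, it tends to be simpler to pack all viewer settings into one typed array and upload it in a single write. A minimal sketch follows, assuming a hypothetical layout of `[threshold, sliceZ, zoom, padding]` and a helper named `packUniforms`.

```javascript
// Packs viewer settings into one Float32Array sized for a uniform buffer.
// Layout (an assumption for this sketch): [threshold, sliceZ, zoom, padding].
// The trailing padding keeps the total at 16 bytes.
function packUniforms({ threshold = 0.5, sliceZ = 0, zoom = 1 } = {}) {
  const clamp01 = (v) => Math.min(1, Math.max(0, v));
  return new Float32Array([clamp01(threshold), clamp01(sliceZ), zoom, 0]);
}
```

The result can be passed straight to `device.queue.writeBuffer(...)` for a 16-byte uniform buffer. On the WGSL side it would correspond to a struct of four `f32` fields in the same order.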

🔗 Working Example Repo

👉 GitHub Demo: github.com/yourname/webgpu-volume-viewer

👉 Live Demo: yourdomain.com/webgpu-demo

The WebGPU API diagram is sourced from the WebGPU Native Examples repository by samdauwe, available under the MIT License.

Beyond Gaming: How WebGPU is Revolutionizing Scientific Visualization

🧠 The Big Picture

WebGPU isn’t just for cool graphics. It’s a paradigm shift. Here’s how it’s opening new doors in:

🧬 1. Medical Imaging & Diagnosis

- CT, MRI, PET scans rendered in real time — directly in a browser.

- Doctors could explore 3D patient data without native apps or slow installs.

- Can even run on tablets or phones with WebGPU support.

Example use case:

🩺 A radiologist views a lung CT scan on a secure hospital web app — zooms, rotates, slices in 3D instantly.

🔬 2. Scientific Research & Simulation

- Real-time volumetric data from physics, astronomy, chemistry labs.

- Visualize simulations (fluid, magnetic fields, dark matter structures) in the browser.

- Enables global collaboration — just share a URL.

Example use case:

🧪 A physics team analyzes plasma simulations across multiple countries through a unified WebGPU interface.

🏗️ 3. Engineering & Industrial Applications

- CAD-style viewers for internal scans (e.g., turbine blades, composite materials).

- In-browser inspection tools for non-destructive testing (NDT).

- Real-time feedback for quality control.

Example use case:

🔧 An aerospace engineer examines X-ray volume scans of carbon fiber parts on a factory floor tablet.

🧰 4. Developer Tools & Education

- Interactive anatomy lessons, chemistry volumes, fluid flow sims.

- Students learn by touching, slicing, and tweaking — not just reading PDFs.

Example use case:

📚 A student interacts with a real CT scan dataset in biology class — all in a browser window.

📈 What This Means for the Web:

- WebGPU bridges desktop-native power and web accessibility

- Cross-platform, hardware-accelerated, open-source friendly

- Democratizes high-performance computing — no installs, just URLs

🔄 What’s Coming Next:

| 🔍 Innovation Area | 🌟 Potential |

|---|---|

| WebXR + WebGPU | VR-based medical or engineering viewers |

| AI Integration | Run models in-browser for automated segmentation |

| Cloud-Powered | Hybrid compute + WebGPU frontend for heavy datasets |

| Streaming Volumes | Progressive loading of massive 3D data (e.g. terabytes) |

💡 Final Takeaway:

WebGPU isn’t just a graphics upgrade — it’s a fundamental shift in what the browser can do.

Chromium-based browsers support WebGPU; support in Safari and Firefox is still catching up and varies by version and platform. Further reading: WebGPU Samples | Mozilla’s WebGPU explainer | Google’s WebGPU blog

💬 Which feature excites you the most? Comment below!
